Google Chrome Gemini Nano

Gemini Nano

Google is bringing Gemini Nano to the browser! As of Chrome 126, Google embeds the Nano version of its own large language model in the browser. This means that websites can use AI features on the local system, without requiring an internet connection once the model has been downloaded. Being a small and efficient LLM has both advantages and disadvantages. No specialized hardware is required, as it can run locally on an average laptop. However, the model's results can also be considered 'nano', as we will see further down in this article.

The LLM is available in Chrome Canary, the developer-oriented version of Chrome that receives daily updates and early releases. Note that not all features in Canary make it to the production version of Chrome, so who knows if this will ever be production ready.

How to get started?

- Download the latest version of Chrome Canary

- Navigate to chrome://flags and enable the Prompt API for Gemini Nano flag.

- Also enable the optimization-guide-on-device-model flag by setting it to Enabled BypassPerfRequirement.

- Next up, visit chrome://components and search for the Optimization Guide On Device Model component. Make sure to update it to the latest version.

And that's it, you should be all set!

Note

I couldn't get the AI component to work on my Lenovo Legion laptop. It did, however, work on a Lenovo ThinkPad. So depending on what machine you're trying this on, your mileage may vary.

Did it work?

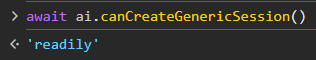

We can validate the AI component by running the following in the browser console:

Image: When this method reports readily, you are ready to go!
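A minimal sketch of such an availability check, assuming the experimental `window.ai` object and its `canCreateTextSession()` method as exposed by early Canary builds. The Prompt API is experimental and its names have changed between builds, so treat both as assumptions. `checkGeminiNano` is a hypothetical helper name; it takes the `ai` object as a parameter so it can also be exercised outside the browser.

```javascript
// Hypothetical helper: reports whether the on-device model can be used.
// Assumes the early experimental Prompt API shape (window.ai.canCreateTextSession),
// which may differ in the Chrome build you are running.
async function checkGeminiNano(ai) {
  if (!ai || typeof ai.canCreateTextSession !== "function") {
    return "unsupported"; // flags not enabled, or a different API shape
  }
  // Resolves to a status string; "readily" means the model is ready to use.
  return await ai.canCreateTextSession();
}

// In the browser console:
// checkGeminiNano(window.ai).then(console.log);
```

If this prints `unsupported`, it is worth double-checking both flags and the component version before assuming the machine itself is the problem.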

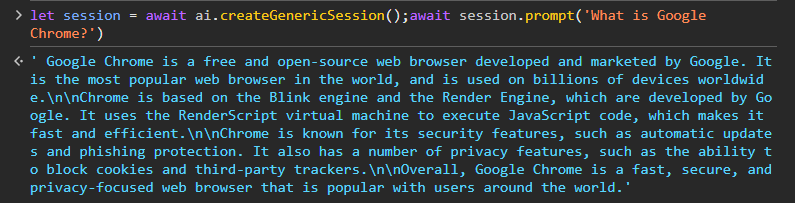

Sending our first prompt

Let's open the JavaScript console by pressing F12. Creating a generic AI session allows us to prompt Gemini Nano.

Image: Sending our first prompt via the browser console
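A sketch of what such a console session could look like, assuming `window.ai.createTextSession()` and `session.prompt()` from the early experimental API. Both names are assumptions and may not match your Chrome build, and `askOnce` is a hypothetical helper, not part of any API.

```javascript
// Hypothetical helper: creates a session, sends one prompt, returns the reply.
async function askOnce(ai, question) {
  const session = await ai.createTextSession(); // assumed API name
  try {
    return await session.prompt(question); // assumed to resolve to the reply text
  } finally {
    // Release the session if this build exposes destroy(); skip otherwise.
    if (typeof session.destroy === "function") session.destroy();
  }
}

// In the browser console:
// askOnce(window.ai, "What is the capital of France?").then(console.log);
```

Creating a fresh session per question keeps the example simple; a chat application would normally keep one session alive across messages.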

Creating a chatbot demo

HTML

Using this local AI model we can create a crude chatbot. Let's do this in vanilla HTML/JS/CSS, so we can contain it all in a single file. You can also find the end result on GitHub. We start out by defining our markup like so:

And continue by adding a script tag so that we can interact with the chatbot.

JavaScript
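The chatbot logic could be sketched roughly as follows. This is not the script from the repository but a minimal illustration under stated assumptions: the session object is assumed to expose `prompt()` as in the early experimental API, and `escapeHtml`, `renderMessage`, `createChat`, and the element names in the wiring comment are all hypothetical.

```javascript
// Escape user and model text before inserting it into the page as HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render one chat message; the "user" / "ai" class lets CSS style each side.
function renderMessage(role, text) {
  return `<div class="message ${role}">${escapeHtml(text)}</div>`;
}

// Hypothetical chat controller: keeps the history and talks to one session.
function createChat(session) {
  const history = [];
  return {
    history,
    async send(text) {
      history.push({ role: "user", text });
      const reply = await session.prompt(text); // assumed API name
      history.push({ role: "ai", text: reply });
      // Return the full conversation as HTML, one element per message.
      return history.map((m) => renderMessage(m.role, m.text)).join("\n");
    },
  };
}

// Browser wiring (form, input and messages are assumed DOM elements):
// const chat = createChat(await window.ai.createTextSession());
// form.addEventListener("submit", async (e) => {
//   e.preventDefault();
//   messages.innerHTML = await chat.send(input.value);
// });
```

Keeping the rendering in pure functions means the message markup can be checked without a browser, and escaping the text avoids injecting whatever the model returns straight into the page.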

CSS

Adding a little bit of style lets us see at a glance whether a chat message is from us or from the AI.

Everything together

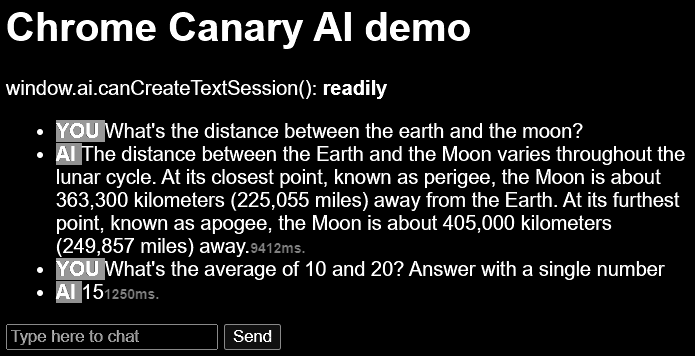

And there we go: an interactive chatbot that runs entirely in the browser. For reference, I'm running this on a Lenovo ThinkPad with a 13th Gen Intel i7-1355U and 32 GB of RAM. You can see from the results below that a short single word (or number) takes a good second to generate. For longer responses, generation time approaches 10 seconds fairly quickly. On similar laptops you can't really use this model for longer or more complicated use cases. However, with the continuous increase in available compute, and the models getting more and more efficient, this can only become more impressive in the future.

Image: A small chat session requires some patience on my device
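To get comparable numbers on your own machine, a prompt can be timed with a small wrapper like the one below. It is a sketch that assumes the session exposes `prompt()` as in the early experimental API; `timePrompt` is a hypothetical helper.

```javascript
// Hypothetical helper: times a single prompt and returns the reply plus duration.
async function timePrompt(session, text) {
  const start = performance.now();
  const reply = await session.prompt(text); // assumed API name
  const ms = performance.now() - start;     // elapsed wall-clock time in milliseconds
  return { reply, ms };
}

// In the browser console (assuming an existing session):
// timePrompt(session, "Write a haiku about browsers").then(console.log);
```

This measures the full time until the complete reply is available, which matches how the latency is experienced in a non-streaming chat interface.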

As mentioned before, you can find the source code for the chat application on GitHub. Please make sure you're running Chrome Canary 126 or later, with the correct flags enabled.

Further reading and relevant links

- https://muthuishere.medium.com/ai-within-your-browser-exploring-google-chromes-new-prompt-api-a5c2c6bd5b4c

- https://syntackle.com/blog/window-ai-in-chrome/